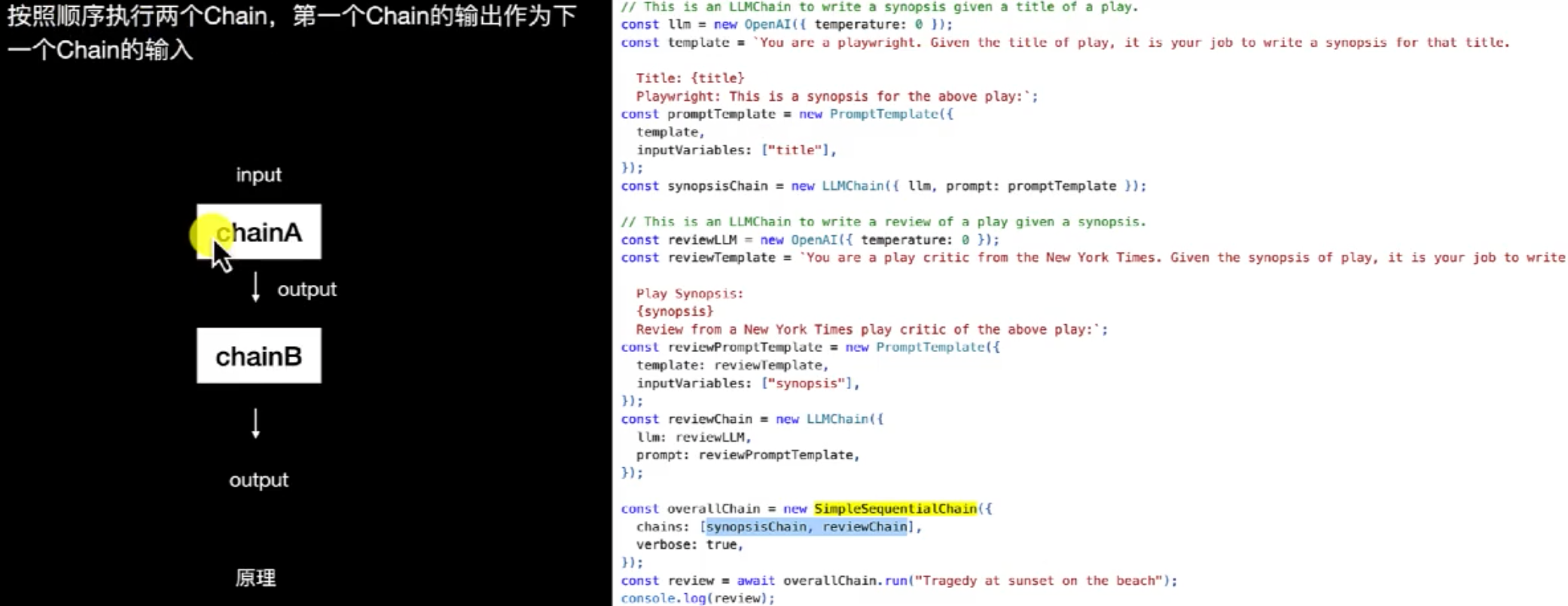

SequentialChain

This section introduces SequentialChain in LangChain. As the name suggests, a SequentialChain runs its steps one after another, in series.

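The core idea is that once the first LLM call finishes, its output becomes the input to the next LLM call. This is somewhat similar to the refine chain among the document chains introduced in the previous section.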
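The hand-off described above can be sketched without any LLM at all. The snippet below is a minimal illustration, not LangChain's implementation: `run_sequential`, `draft_outline`, and `expand_outline` are hypothetical names, and the two step functions are plain-Python stand-ins for model calls.

```python
from typing import Callable, List

# A step takes the previous output as text and returns new text.
Step = Callable[[str], str]


def run_sequential(steps: List[Step], first_input: str) -> str:
    """Run the steps in order, feeding each output into the next step."""
    value = first_input
    for step in steps:
        value = step(value)
    return value


# Hypothetical stand-ins for two LLM calls.
def draft_outline(topic: str) -> str:
    return f"Outline for: {topic}"


def expand_outline(outline: str) -> str:
    return f"Article based on [{outline}]"


result = run_sequential([draft_outline, expand_outline], "serial chains")
print(result)
```

The second step never sees the original topic directly; it only receives what the first step produced, which is the defining property of a serial chain.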

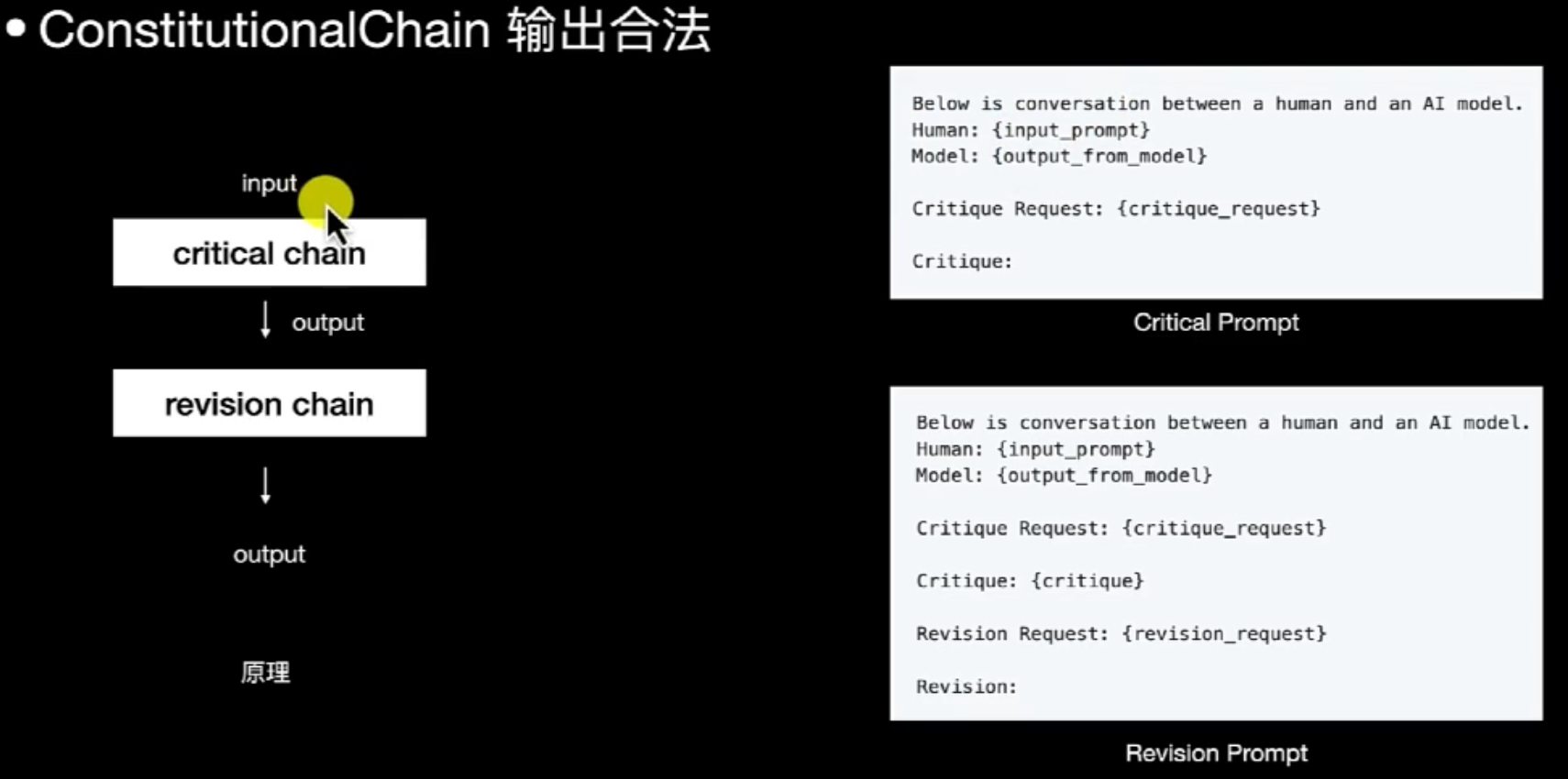

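LangChain has two typical SequentialChains:

• ConstitutionalChain: the "constitutional chain". It checks the output against a predefined set of principles (the "constitution") and revises it where needed, so that the output is compliant.

• OpenAIModerationChain: the moderation chain. It checks whether the input content is ethically acceptable, so that the input is compliant.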

ConstitutionalChain

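At its core, the chain is built around two prompts: a critique prompt and a revision prompt.

ConstitutionalChain code (a LangGraph-based implementation of the critique-and-revise flow):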

    from typing import List, Optional, Tuple

    from langchain.chains.constitutional_ai.prompts import (
        CRITIQUE_PROMPT,
        REVISION_PROMPT,
    )
    from langchain.chains.constitutional_ai.models import ConstitutionalPrinciple
    from langchain_core.output_parsers import StrOutputParser
    from langchain_core.prompts import ChatPromptTemplate
    from langchain_openai import ChatOpenAI
    from langgraph.graph import END, START, StateGraph
    from typing_extensions import Annotated, TypedDict

    llm = ChatOpenAI(model="gpt-4o-mini")


    class Critique(TypedDict):
        """Generate a critique, if needed."""

        critique_needed: Annotated[bool, ..., "Whether or not a critique is needed."]
        critique: Annotated[str, ..., "If needed, the critique."]


    # Prompt that asks the model to critique a response against one principle.
    critique_prompt = ChatPromptTemplate.from_template(
        "Critique this response according to the critique request. "
        "If no critique is needed, specify that.\n\n"
        "Query: {query}\n\n"
        "Response: {response}\n\n"
        "Critique request: {critique_request}"
    )

    # Prompt that asks the model to revise the response based on the critique.
    revision_prompt = ChatPromptTemplate.from_template(
        "Revise this response according to the critique and revision request.\n\n"
        "Query: {query}\n\n"
        "Response: {response}\n\n"
        "Critique request: {critique_request}\n\n"
        "Critique: {critique}\n\n"
        "If the critique does not identify anything worth changing, ignore the "
        "revision request and return 'No revisions needed'. If the critique "
        "does identify something worth changing, revise the response based on "
        "the revision request.\n\n"
        "Revision Request: {revision_request}"
    )

    # Three small chains: initial answer, structured critique, and revision.
    chain = llm | StrOutputParser()
    critique_chain = critique_prompt | llm.with_structured_output(Critique)
    revision_chain = revision_prompt | llm | StrOutputParser()


    class State(TypedDict):
        query: str
        constitutional_principles: List[ConstitutionalPrinciple]
        initial_response: str
        critiques_and_revisions: List[Tuple[str, str]]
        response: str


    async def generate_response(state: State):
        """Generate initial response."""
        response = await chain.ainvoke(state["query"])
        return {"response": response, "initial_response": response}


    async def critique_and_revise(state: State):
        """Critique and revise response according to principles."""
        critiques_and_revisions = []
        response = state["initial_response"]
        for principle in state["constitutional_principles"]:
            critique = await critique_chain.ainvoke(
                {
                    "query": state["query"],
                    "response": response,
                    "critique_request": principle.critique_request,
                }
            )
            if critique["critique_needed"]:
                revision = await revision_chain.ainvoke(
                    {
                        "query": state["query"],
                        "response": response,
                        "critique_request": principle.critique_request,
                        "critique": critique["critique"],
                        "revision_request": principle.revision_request,
                    }
                )
                # The revised text becomes the input for the next principle.
                response = revision
                critiques_and_revisions.append((critique["critique"], revision))
            else:
                critiques_and_revisions.append((critique["critique"], ""))
        return {
            "critiques_and_revisions": critiques_and_revisions,
            "response": response,
        }


    # Wire the two steps into a linear graph: START -> generate -> critique -> END.
    graph = StateGraph(State)
    graph.add_node("generate_response", generate_response)
    graph.add_node("critique_and_revise", critique_and_revise)

    graph.add_edge(START, "generate_response")
    graph.add_edge("generate_response", "critique_and_revise")
    graph.add_edge("critique_and_revise", END)
    app = graph.compile()

    constitutional_principles = [
        ConstitutionalPrinciple(
            critique_request="Tell if this answer is good.",
            revision_request="Give a better answer.",
        )
    ]

    query = "What is the meaning of life? Answer in 10 words or fewer."

    # Top-level `async for` works in a notebook or async REPL; in a script,
    # wrap this loop in an async function and run it with asyncio.run().
    async for step in app.astream(
        {"query": query, "constitutional_principles": constitutional_principles},
        stream_mode="values",
    ):
        subset = ["initial_response", "critiques_and_revisions", "response"]
        print({k: v for k, v in step.items() if k in subset})
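To see the control flow of the critique-and-revise step in isolation, here is a minimal sketch that replaces the LLM calls with deterministic stubs. Everything in it is an assumption made for illustration: `Principle` is a hypothetical stand-in for `ConstitutionalPrinciple`, and `length_critic` and `truncating_reviser` are toy functions, not part of LangChain.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Principle:
    """Hypothetical stand-in for ConstitutionalPrinciple."""
    critique_request: str
    revision_request: str


# A critic returns (critique_needed, critique_text); a reviser returns new text.
Critic = Callable[[str, Principle], Tuple[bool, str]]
Reviser = Callable[[str, str, Principle], str]


def critique_and_revise(
    response: str, principles: List[Principle], critic: Critic, reviser: Reviser
) -> Tuple[str, List[Tuple[str, str]]]:
    """Apply each principle in turn; a revision feeds the next critique."""
    history: List[Tuple[str, str]] = []
    for principle in principles:
        needed, critique = critic(response, principle)
        if needed:
            response = reviser(response, critique, principle)
            history.append((critique, response))
        else:
            history.append((critique, ""))
    return response, history


# Toy stubs in place of the critique and revision LLM calls.
def length_critic(response: str, principle: Principle) -> Tuple[bool, str]:
    words = response.split()
    if len(words) > 5:
        return True, f"Too long: {len(words)} words."
    return False, "No critique needed."


def truncating_reviser(response: str, critique: str, principle: Principle) -> str:
    return " ".join(response.split()[:5])


rule = Principle("Is the answer at most five words?", "Shorten it.")
final, history = critique_and_revise(
    "To love, learn, and leave things better than before",
    [rule, rule],
    length_critic,
    truncating_reviser,
)
print(final)
print(history)
```

Applying the same rule twice shows both branches: the first pass triggers a revision, and the second pass finds nothing to change and records an empty revision.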

OpenAIModerationChain

The principle is similar to ConstitutionalChain, so let's go straight to the code:

    import { OpenAIModerationChain, LLMChain } from "langchain/chains";
    import { PromptTemplate } from "langchain/prompts";
    import { OpenAI } from "langchain/llms/openai";

    // Define an asynchronous function called run
    export async function run() {
      // A string containing potentially offensive content from the user
      const badString = "Bad naughty words from user";

      try {
        // Create a new instance of the OpenAIModerationChain
        const moderation = new OpenAIModerationChain();

        // Send the user's input to the moderation chain and wait for the result
        const { output: badResult } = await moderation.call({
          input: badString,
          throwError: true, // If set to true, the call will throw an error when the moderation chain detects violating content
        });

        // If the moderation chain does not detect violating content, it will return the original input,
        // which can then be passed on to another chain
        const model = new OpenAI({ temperature: 0 });
        const template = "Hello, how are you today {person}?";
        const prompt = new PromptTemplate({ template, inputVariables: ["person"] });
        const chainA = new LLMChain({ llm: model, prompt });
        const resA = await chainA.call({ person: badResult });
        console.log({ resA });
      } catch (error) {
        // If an error is caught, it means the input contains content that violates OpenAI TOS
        console.error("Naughty words detected!");
      }
    }
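The same "moderate first, then call the next chain" pattern can be sketched in Python with stubs. This is an illustration under stated assumptions, not the OpenAI moderation API: `moderate`, `BLOCKED_TERMS`, `ModerationError`, and `greet` are hypothetical, and the keyword check merely stands in for a real moderation service.

```python
class ModerationError(ValueError):
    """Raised when input is flagged and throw_error is True."""


# Hypothetical keyword list; a real check would call a moderation service.
BLOCKED_TERMS = {"naughty"}
FLAGGED_MESSAGE = "Text was flagged by the moderation check."


def moderate(text: str, throw_error: bool = False) -> str:
    """Return the text unchanged if clean; otherwise raise or return a notice."""
    flagged = any(term in text.lower() for term in BLOCKED_TERMS)
    if not flagged:
        return text
    if throw_error:
        raise ModerationError("input was flagged")
    return FLAGGED_MESSAGE


def greet(person: str) -> str:
    """Hypothetical stand-in for the downstream LLM chain."""
    return f"Hello, how are you today {person}?"


def moderated_greet(user_input: str) -> str:
    """Run moderation first; only clean input reaches the downstream step."""
    try:
        safe_input = moderate(user_input, throw_error=True)
    except ModerationError:
        return "Naughty words detected!"
    return greet(safe_input)


print(moderated_greet("Alice"))
print(moderated_greet("Bad naughty words from user"))
```

Placing the check in front of the model call means flagged input never reaches the downstream step, which mirrors the try/catch structure of the example above.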